AI Search Visibility

Why visibility now depends on being understood, not just ranked

Some shifts in AI arrive with major launches and obvious behaviour changes. Others slip in quietly, reshaping habits we already take for granted.

This edition is a little different. Once a month, we pause the weekly roundups and focus on one defining shift in AI, unpacking it with more depth and clarity.

In Perspective: This week, we’re looking at AI search visibility and what happens when search stops being a gateway and starts becoming the place where answers are resolved. As links give way to summaries and chat-based responses shape what we see first, visibility becomes less about ranking and more about how systems understand and represent information.

It’s a huge change and it’s already altering how discovery works for people, platforms, and brands alike.

Over the last year, search has started to change in a way that is easy to miss if you are only looking at traditional SEO metrics.

People are still searching. Google still handles the majority of global query volume. Rankings still update. On the surface, the system looks normal.

What has changed is where the answer now forms.

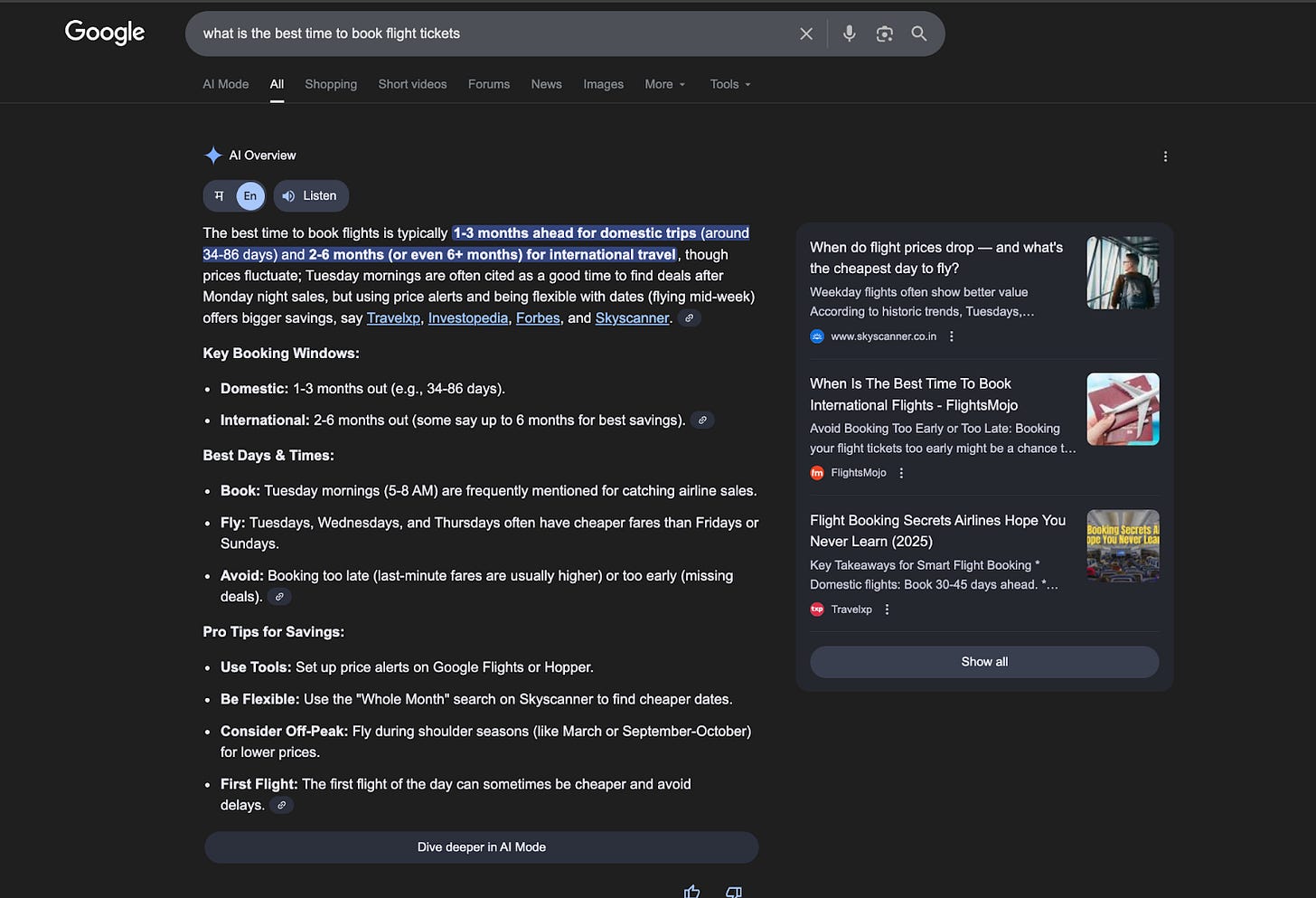

Search is no longer limited to a list of links that sends users elsewhere to think, compare, and decide. Increasingly, that work is happening inside first-party AI systems. Google’s AI Overviews and AI Mode now generate explanations directly inside the search interface. At the same time, a growing set of users start certain informational and comparison queries inside tools like ChatGPT and Perplexity, where the default output is an answer, not a destination.

This does not replace traditional search behaviour. It layers on top of it.

But that layer changes how decisions are made.

When an AI system summarises options, compares tools, or explains a concept before a click happens, the role of rankings shifts. Pages can still rank. Keywords can still look healthy. And yet, fewer users click through, because the system has already done part of the cognitive work that used to happen on the website.

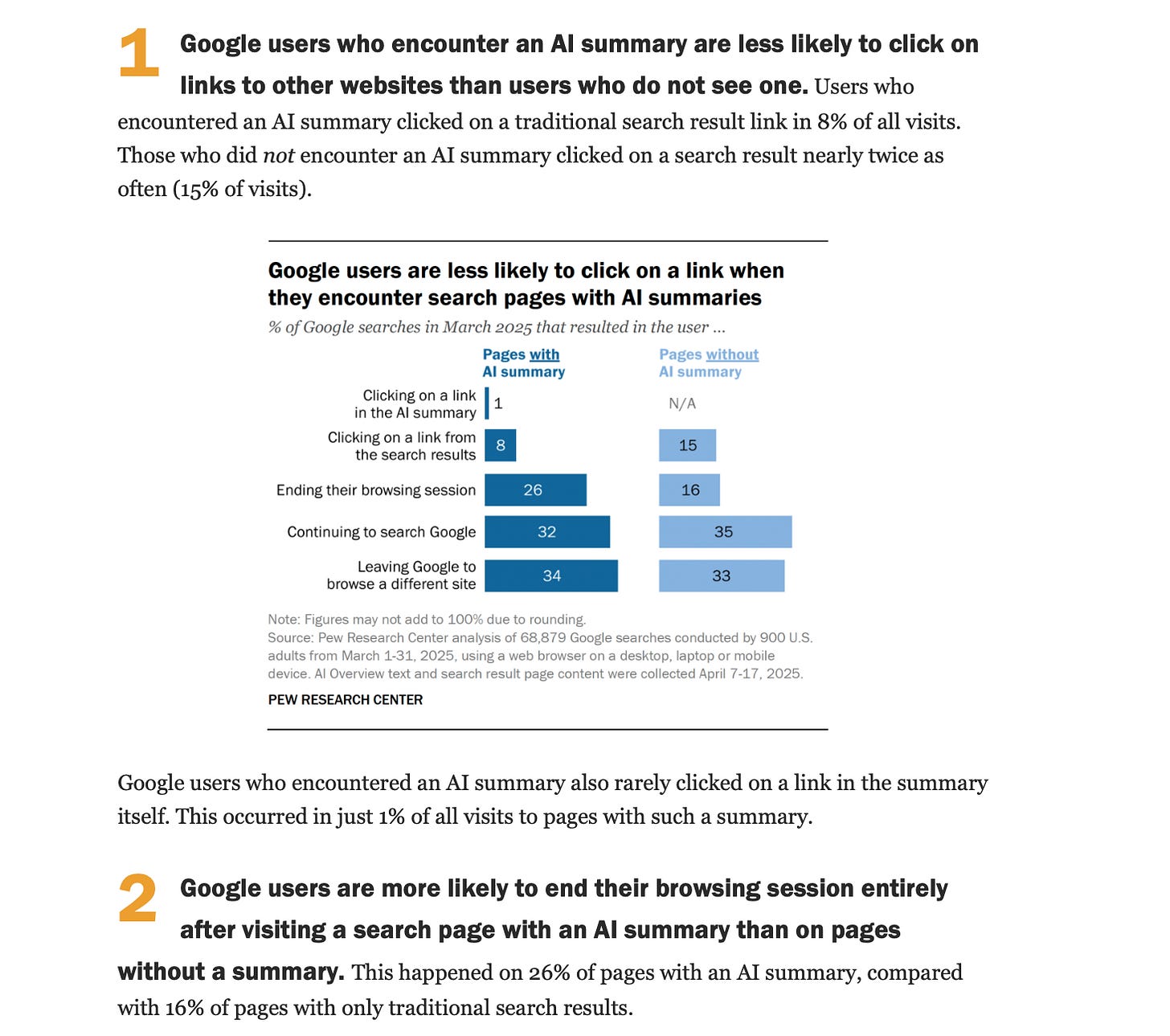

Recent research shows that when Google’s AI summaries appear in search results, users are far less likely to click through to websites than when no summary appears. In a Pew Research Center analysis of user behaviour, only 8% of visits to a results page with an AI summary led to a click on a traditional search result, compared with 15% when no AI summary appeared. And just 1% led to a click on a link within the summary itself.
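To make the size of that gap concrete, here is a small arithmetic sketch. The two click rates come from the Pew figures above; the `relative_change` helper and the 10,000-visit example are my own illustrative assumptions, not part of the study.

```python
# Click rates taken from the Pew Research Center figures quoted above.
ctr_without_summary = 0.15  # share of visits with a click when no AI summary appeared
ctr_with_summary = 0.08     # share of visits with a click when an AI summary appeared


def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (negative means a drop)."""
    return (after - before) / before


drop = relative_change(ctr_without_summary, ctr_with_summary)
print(f"Relative change in click rate: {drop:.0%}")

# Hypothetical volume, purely for illustration: 10,000 search visits.
visits = 10_000
clicks_without = round(visits * ctr_without_summary)
clicks_with = round(visits * ctr_with_summary)
print(f"Clicks per {visits:,} visits: {clicks_without} without a summary, {clicks_with} with one")
```

A seven-point gap sounds small, but relative to the 15% baseline it is a drop of close to half.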

This is why many teams are seeing a strange pattern emerge. Rankings remain stable. Impressions stay flat or grow. Traffic quietly declines.

Nothing is broken. The system is simply behaving differently.

Search is no longer only a gateway. It is increasingly the place where answers are formed, options are narrowed, and conclusions are reached. And once that happens, the signals teams have relied on for years begin to behave in ways that feel unfamiliar.

The shift is subtle. But its impact is not.

What AI search visibility actually is

AI search visibility is not about ranking higher on a page.

It’s about whether an AI system understands your brand, product, or content well enough to include it when it generates an answer.

Traditional search engines were designed to retrieve documents and rank them. The work of interpretation was left to the user. AI search systems are designed to produce responses. They summarize, compare, and synthesize information into something that feels complete.

That difference matters because it changes the unit of competition.

In classic SEO, you competed for placement in a list. In AI-mediated search, you compete for inclusion inside an answer. If a brand or product is not something the system can confidently interpret, it does not matter how well the underlying page ranks. It simply does not appear.

Visibility is now shifting from position to interpretation.

This is often described as a move beyond SEO, but that framing is misleading. What is emerging is a stack.

SEO still governs whether content is discoverable and trusted as a source. Systems still need to crawl, index, and understand what a page is about.

AEO, or Answer Engine Optimization, determines whether that content can be used as an answer. Can a system extract a clear explanation from the page without guessing, stitching, or misrepresenting what is being said?

GEO, or Generative Engine Optimization, sits one level above that. It shapes whether a brand appears at all in generated responses, how often it is mentioned or cited, and how it is framed across different systems.

These are not competing disciplines. You cannot do GEO without SEO, and you cannot do AEO by chasing keywords. The brands that show up consistently are not the ones producing the most content, but the ones producing the clearest sources of truth.

Where it stands now

Most companies still evaluate search performance through familiar proxies. For them, rankings indicate position, impressions signal demand, click-through rate shows whether a result earned attention, and traffic translates to volume.

Those metrics worked because search behaviour itself was predictable. Users searched, scanned a list, clicked, and explored.

AI answers interrupt that flow.

Users still search, often in the same places, but clicking is no longer the default next step. When an answer feels sufficient, the journey ends inside the interface.

This explains why the change feels confusing from the inside. A page can rank well and still lose traffic, not because it failed, but because it is no longer meant to be the destination. It has become a source.
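One way to spot this pattern in your own reporting is to compare two periods and flag pages where position and impressions held steady while clicks fell. The sketch below is a minimal illustration under stated assumptions: the `PageMetrics` fields, the thresholds, and the sample numbers are all hypothetical, and you would replace them with an export from whatever analytics tool you use.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    """Hypothetical before/after metrics for one page."""
    url: str
    avg_position_before: float
    avg_position_after: float
    impressions_before: int
    impressions_after: int
    clicks_before: int
    clicks_after: int


def looks_like_source_not_destination(
    m: PageMetrics,
    max_position_shift: float = 1.0,     # assumed tolerance for "stable" ranking
    min_impression_ratio: float = 0.95,  # impressions kept at least 95% of prior level
    max_click_ratio: float = 0.80,       # clicks fell to 80% of prior level or less
) -> bool:
    """True when ranking and impressions held but clicks dropped."""
    ranking_stable = abs(m.avg_position_after - m.avg_position_before) <= max_position_shift
    impressions_held = m.impressions_after >= m.impressions_before * min_impression_ratio
    clicks_fell = m.clicks_after <= m.clicks_before * max_click_ratio
    return ranking_stable and impressions_held and clicks_fell


# Made-up sample data, for illustration only.
pages = [
    PageMetrics("/guide-a", 3.1, 3.0, 12000, 12500, 900, 560),  # stable rank, fewer clicks
    PageMetrics("/guide-b", 4.0, 9.5, 8000, 5000, 400, 150),    # ranking itself dropped
    PageMetrics("/guide-c", 2.0, 2.2, 5000, 5100, 300, 290),    # clicks roughly unchanged
]

flagged = [p.url for p in pages if looks_like_source_not_destination(p)]
print(flagged)
```

A flag here does not prove that an AI answer absorbed the click. It only separates pages that lost traffic because they lost position from pages that lost traffic while everything else looked healthy.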

There is a useful parallel here with social platforms. On Instagram or LinkedIn, visibility stopped being primarily about who followed you and became about whether the platform chose to distribute you. Search is entering a similar phase. The platform is no longer only indexing information and routing attention. It is deciding when a question is considered resolved.

What gets better for people

From a user perspective, the appeal of AI-driven search is real and measurable, even if it has not fully replaced traditional search yet.

Surveys conducted with people who regularly use AI search tools find that a large majority prefer the AI experience for certain kinds of queries. In one such survey, more than 80% of respondents said they found AI-powered search tools more efficient than traditional search engines. Not just faster, but more useful for synthesising answers and guiding decisions.

At the same time, broader population studies show that users are adopting AI search behaviour in ways that coexist with classic search engines. In a recent poll of U.S. adults, about 60% of people overall reported using AI to search for information “at least some of the time,” and that figure rose to nearly three-quarters among those under 30.

Other surveys paint a more nuanced picture: roughly a quarter to a third of users in major markets now use AI tools instead of traditional search engines for at least some queries, with younger demographics driving much of that shift.

This tells us two things that are both true right now:

Many users like the experience of AI search because it reduces effort and feels sufficiently complete for common decision-shaping questions.

A large portion of people still rely on traditional search for other query types, especially when they want to browse multiple sources, confirm accuracy, or access the latest news, and this varies substantially by age and context.

In other words, the behaviour we see is additive rather than a wholesale replacement: people use both depending on their goals, query type, and personal preference.

AI search reduces the work people used to do manually. It summarizes long explanations, compares options without opening multiple tabs, and answers follow-up questions in the same place.

For everyday queries like:

“best time to book flights”

“which phone under a certain budget is better”

“how to choose between two tools”

most people are not looking to research deeply. They want a reasonable answer and a way to move on. AI search delivers exactly that.

This is why adoption is happening quietly. People are not dramatically changing behaviour. They are simply doing less work to reach the same outcome.

It also explains why teams often feel tension in metrics. People may like the AI answer they receive because it feels efficient, but if it never results in a click to a brand’s website, traditional SEO metrics will not capture that satisfaction.

What gets worse for people

The cost of this shift shows up slowly.

When answers are generated instead of explored, the system narrows the field before users ever see alternatives. Ask an AI system for the best project management tool for a small team, and the same few products tend to appear. The framing is familiar. Strengths are summarized. Trade-offs are acknowledged just enough to feel balanced. The answer feels complete.

What stays invisible are the many specialized tools that might actually be a better fit. Not because they perform poorly, but because they are harder to summarize, discussed less often in widely trusted sources, or absent from the places the system draws confidence from.

Over time, this repetition compounds. Certain brands become defaults. Others struggle to enter the conversation at all. Discovery narrows, not through intent, but through reinforcement.

There is a second, subtler effect.

AI answers are diplomatic by design. When users ask about controversial or value-laden topics, responses tend to converge toward balance and caution. Strong critiques are softened. Adversarial perspectives lose force. The result is not necessarily incorrect information, but a narrowing of what is expressed by default.

This shapes how opinions form. Not by telling people what to think, but by quietly constraining the range of perspectives they encounter unless they deliberately push beyond the first answer.

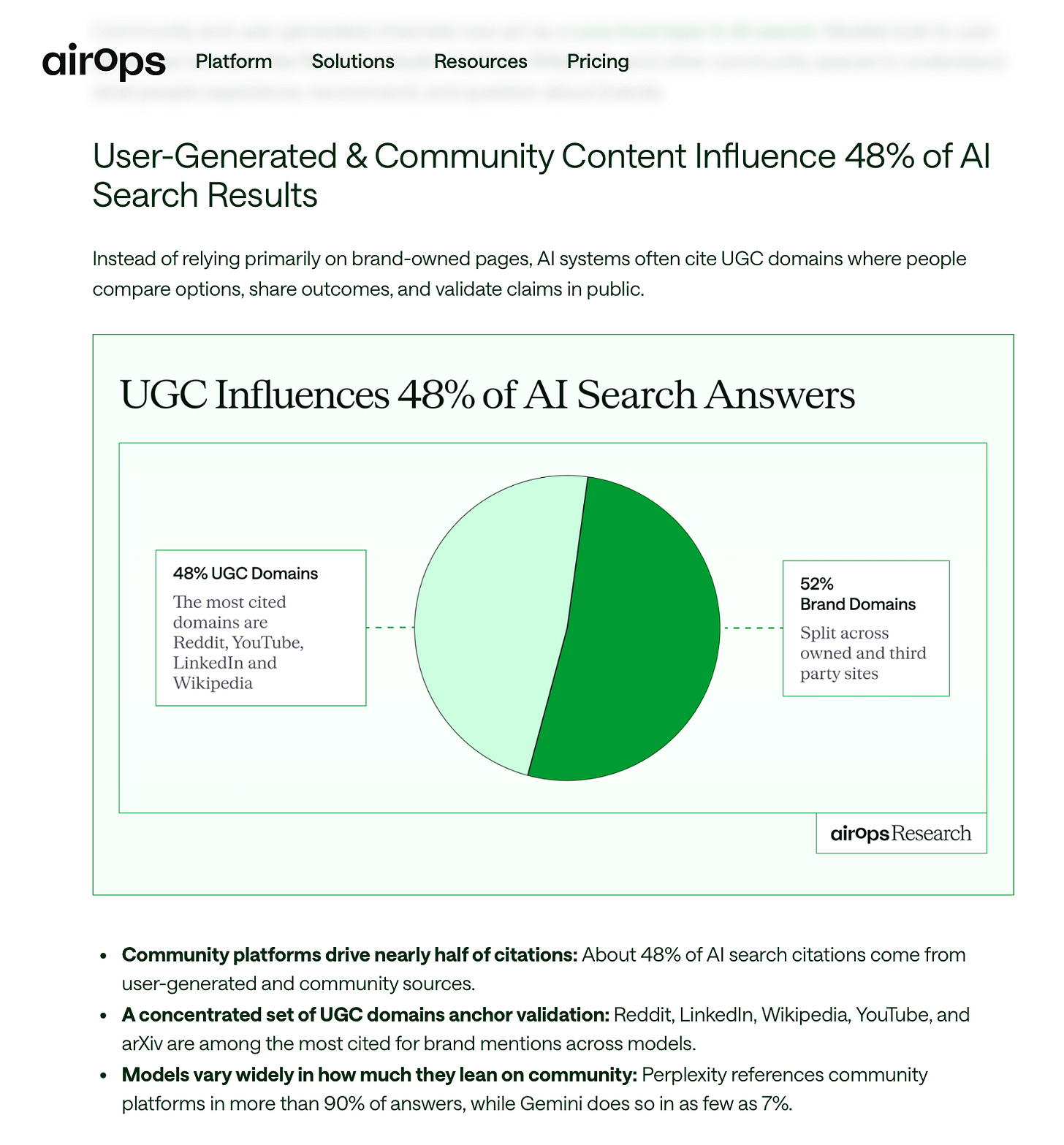

One reason this happens lies in where AI systems learn trust.

Increasingly, that learning happens outside brand-owned websites. Community discussions, Reddit threads, YouTube explainers, Wikipedia pages, and practitioner blogs play an outsized role because they contain firsthand experience, disagreement, and concrete comparisons.

A polished brand page that avoids specifics can be difficult for a system to interpret. A messy Reddit thread full of people arguing about real trade-offs is often easier to synthesise.

As a result, brands no longer control their narrative primarily through what they publish themselves. Their visibility is increasingly shaped by what is said about them in public.

Research from AirOps adds another layer to this shift. Their analysis suggests that around half of AI search citations now come from community and user-generated sources, rather than brand-owned websites.

That means:

brands lose control over how they are described

the “truth layer” shifts to Reddit threads, YouTube explainers, LinkedIn posts, and Wikipedia summaries

a strong community narrative can outweigh a polished brand website

The risk is not that AI search consistently gives wrong answers.

It’s that it gives answers that are good enough so often that users stop noticing what they are not seeing.

What it means for platforms

When we talk about platforms here, we’re referring to search and answer platforms like Google Search, ChatGPT, Perplexity, and Gemini. These systems are now converging on a similar outcome, even though they arrive there in different ways.

The moment we are talking about is this: search no longer needs to send users elsewhere to be considered complete.

For traditional search platforms like Google, this represents a clear shift in role.

For most of its history, Google’s influence came from routing attention. It decided which links appeared first, how they were presented, and where users went next. Even when it introduced featured snippets or knowledge panels, those were usually limited to simple facts. For complex or judgment-based queries, users still had to click through to other sites to finish the task.

That assumption has now changed.

With AI Overviews and AI Mode, Google increasingly resolves multi-step questions directly inside the search interface. Take a query like “how to file income tax returns.” Instead of sending users to government pages or explainer blogs, the platform now often explains the steps itself. Links still exist, but they are optional. For many users, the search ends without a click.

At this point, Google is no longer primarily deciding where you should go. It’s deciding what information is enough.

This shift also changes how platform power works.

At first, adding AI looks like deeper monopolization. Large platforms now sit closer to the moment of decision. They generate the answer, shape the framing, and often resolve the search without sending users elsewhere.

At the same time, AI weakens the old form of control.

In a link-based system, platforms monopolized attention by deciding which sites received traffic. In an answer-based system, platforms depend on a broader and more unstable source layer. AI systems pull from documentation, community discussions, videos, forums, and third-party analysis that they do not own and cannot fully control.

The result is a split.

Platforms gain power over when a search ends, but lose exclusive control over where truth comes from. Power concentrates at the level of answer formation, but fragments at the level of source creation. This is why competing systems often surface similar answers while relying on overlapping sources.

Monopolization does not disappear. It changes form.

AI-native platforms like ChatGPT, Perplexity, and Gemini arrive at this same moment differently.

They were never designed to route traffic. From the start, their role was to generate answers directly. What has changed is not their function, but their scale. As more users start their searches inside these tools, their answer-first model begins to shape discovery at a level previously reserved for search engines.

Despite different histories, both types of platforms now share the same responsibility.

They do not just organise information.

They decide when the search is over.

What this means for websites and content sources

When search was link-based, websites were destinations. Even if a page ranked lower, a compelling explanation could still win once a user arrived.

In an AI-mediated search, many websites are no longer places users visit. They are sources the system reads from.

Instead of asking, “Is this page good enough for a user to read?” AI search systems ask, “Can I confidently extract a clear explanation from this page?”

If the answer is yes, the content may be reused, summarised, or cited. If not, the page may never surface at all, even if it ranks well.

For example, imagine a user asks, “How does capital gains tax work?”

One website answers this with a long, narrative blog post. The definition appears midway through the page. The necessary information is scattered across paragraphs. Important caveats are buried near the end. The page reads well for humans, but it requires patience.

Another site answers the same question with a clear opening definition, followed by a short section explaining when capital gains apply, then a bullet-point breakdown of tax rates and exceptions. The language is plain. The structure is obvious.

In a traditional search, both pages might rank and receive traffic.

In an AI search, the second page is far more likely to be reused.

Not because it is better written, but because it is easier to interpret without error.

AI search rewards explainability over engagement.

This also elevates a specific layer of the web. Explainers, comparison pages, documentation, and community discussions increasingly shape what AI systems say, even when the brand itself is not the primary voice.

For content creators, success is no longer only about attracting clicks but about being clear enough to be used.

What it means for brands

For brands, AI search visibility introduces a new and uncomfortable reality.

Visibility is no longer just about where you rank. It is about whether you are spoken about at all, how you are described, and what role you play in the answer that forms before a click.

Rankings measure visibility in a list. Mentions and citations measure visibility in an answer.

This is why traditional SEO metrics now feel incomplete, and why observing how brands appear across AI systems has become its own layer of insight.

When people search inside ChatGPT, Perplexity, or Google’s AI experiences, the system decides which brands to mention, how to describe them, and what role they play in the answer. Often, users never see alternatives.

This is also where tools like Radix and Searchable, which I have covered in earlier editions, become relevant. They do not optimize content. They observe a different layer:

whether a brand appears in AI-generated answers

how often it is cited versus loosely mentioned

how it is framed across different systems
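To show what observing that layer could look like in practice, here is a rough sketch that tallies mention and citation rates per system from a set of sampled answers. It is not how Radix or Searchable work; the `SampledAnswer` structure, the brand name "Acme Boards", the `.example` domains, and the sample answers are all hypothetical. It covers only the first two questions above, since framing needs human or model review rather than string matching.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SampledAnswer:
    """One AI-generated answer collected for a test prompt (hypothetical structure)."""
    system: str            # e.g. "ChatGPT" or "Perplexity"
    prompt: str
    text: str              # the generated answer
    cited_urls: list[str]  # links the answer attached as sources


def visibility_by_system(answers, brand_name, brand_domain):
    """Per system: share of answers that mention the brand, and share that cite its domain."""
    counts = defaultdict(lambda: {"answers": 0, "mentioned": 0, "cited": 0})
    for a in answers:
        c = counts[a.system]
        c["answers"] += 1
        if brand_name.lower() in a.text.lower():  # loose mention in the answer text
            c["mentioned"] += 1
        if any(brand_domain in url for url in a.cited_urls):  # brand site used as a source
            c["cited"] += 1
    return {
        system: {
            "mention_rate": c["mentioned"] / c["answers"],
            "citation_rate": c["cited"] / c["answers"],
        }
        for system, c in counts.items()
    }


# Made-up sample answers, for illustration only.
prompt = "best project management tool for a small team"
answers = [
    SampledAnswer("ChatGPT", prompt, "Popular options include Acme Boards.",
                  ["https://acmeboards.example/pricing"]),
    SampledAnswer("ChatGPT", prompt, "Teams often pick OtherTool.", []),
    SampledAnswer("Perplexity", prompt, "Acme Boards is often recommended on forums.",
                  ["https://forum.example/thread/1"]),
]

report = visibility_by_system(answers, "Acme Boards", "acmeboards.example")
print(report)
```

In this toy sample the brand is mentioned in every Perplexity answer but never cited there, which is exactly the mentioned-versus-cited gap described above.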

This is not incremental SEO. It’s a new visibility surface.

What brands will do next

Once this reality sinks in, the response becomes clearer.

Brands will invest less in producing more content and more in producing fewer, clearer sources of truth. Instead of overlapping articles optimised around slight keyword variations, they will focus on canonical explanations that can travel across systems without losing meaning. Strong structure, consistent language, and explicit positioning will matter more than volume.

But clarity alone is no longer enough.

Those explanations now need to exist across multiple surfaces and formats. Text, video, documentation, comparisons, community discussions. Not because brands need to “be everywhere,” but because AI systems learn by triangulating across channels. A brand that is clearly described on its website but absent or inconsistent elsewhere is harder to include with confidence.

This shifts distribution from chasing reach to reinforcing meaning.

Brands will pay closer attention to where AI systems learn trust. Community platforms, expert commentary, and credible third-party explainers increasingly shape how brands are described, often more than brand-owned content. Being cited consistently across these surfaces becomes as important as ranking on a page.

Optimization moves away from chasing keywords and toward earning inclusion across formats, channels, and systems.

When does this become “normal”?

We are already past experimentation.

AI answers are live at scale. Conversational search is being integrated into core workflows. The next phase is not adoption. It’s adjustment.

The limiting factors will not be technology. Rather, they’ll be trust, regulation, and how much decision-making users are willing to delegate without explanation.

My take

I don’t see this as the end of SEO. I see it as a correction.

Ranking still matters, but it is no longer the outcome. Being clearly understood is. Once answers are generated instead of discovered, brands compete on clarity, not volume.

What changes next is distribution.

It is no longer enough for a brand to explain itself well on its own website. AI systems learn by comparing signals across formats and platforms. If your product is clearly described in one place and vague or inconsistent everywhere else, the system will default to whatever narrative is easiest to assemble.

That means brands need to focus on being consistently citable. Across text, video, documentation, comparisons, and community discussion. Not by being everywhere, but by saying the same thing wherever they appear.

The work ahead is not chasing new optimisation tricks. It is simplifying what you publish, being explicit about trade-offs, and making your story easy to repeat without distortion.

Once search starts speaking on your behalf, the question is no longer how well you rank.

It’s whether the system can explain you correctly when you’re not there.

In the Spotlight

Recommended watch: How to Win in AI Search (Real Data, No Hype) | Ryan Law (Ahrefs)

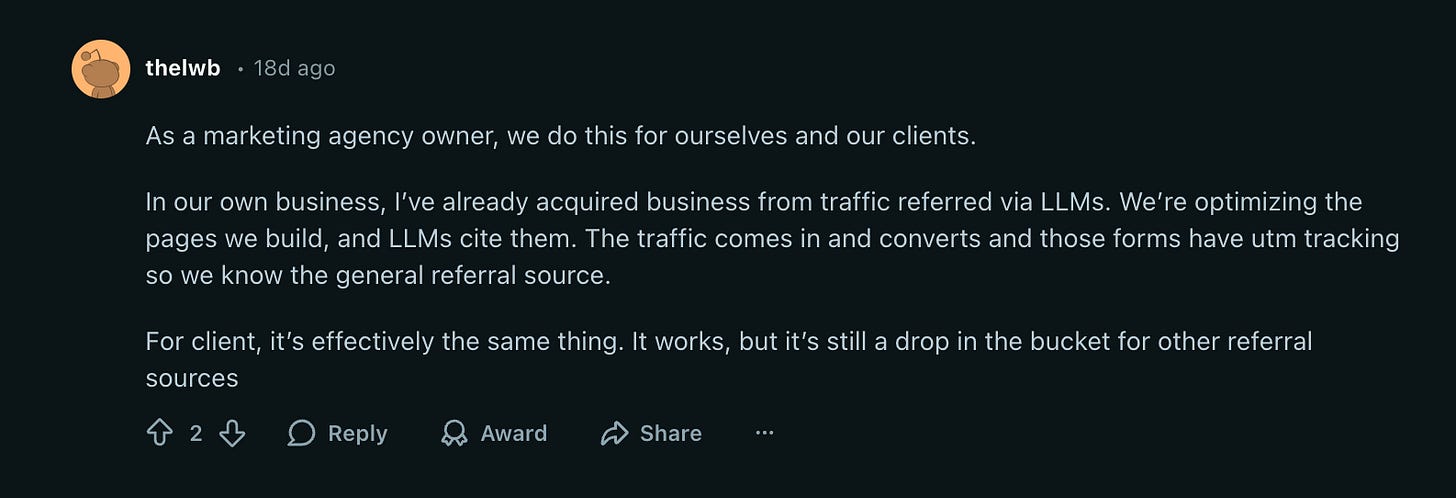

I liked this episode because it’s one of the few AI search conversations that feels grounded in reality, not hype. Ryan makes a simple point: there are only a handful of levers you can actually influence, and most “rank in ChatGPT” advice is noise. The biggest takeaway is that mentions across the web matter more than ever. If LLMs learn what your brand is from what others say about you, then citations and co-mentions start behaving like the new backlinks. It’s also refreshingly honest about the messy part: the rules are still changing, so anyone selling certainty should make you cautious.

ChatGPT alone gets over 2.5 billion prompts every single day. And allegedly, visitors from ChatGPT are converting much better than those coming from Google.

– Tim Soulo • (Intro, ~0:07)

Closing Notes

That’s it for this edition of AI Fyndings. From search shifting from exploration to resolution, to answers being shaped before we ever click, to platforms and brands adapting to a world where systems increasingly decide what information is sufficient, this week was about visibility quietly moving out of dashboards and into the logic of AI.

Thank you for reading. I hope this deep dive helped put language and structure around a shift many of us are already feeling. See you next week with more stories, tools, and ideas shaping how AI continues to change how we discover, decide, and understand the world around us.

With love,

Elena Gracia

AI Marketer, Fynd