Ideogram, EMO AI, LTX.studio, Google Genie, and Much More in the AI World

Welcome to the ninth edition of the PixelBin Newsletter. Every Monday, we send you one article that will help you stay informed about the latest AI developments in Business, Product, and Design.

In Today’s Newsletter

🔥 Top AI Highlights

🌟 EMO AI by Alibaba: Transform Images to Lifelike Videos

🎨 LTX.studio: The Next Gen Visual Storytelling

🚀 Google DeepMind’s 'Genie': AI That Generates Video Games

🔥 AI in the Fast Lane

🔥 Top AI Highlights

Ideogram has rolled out version 1.0 of its text-to-image model. The most notable improvements are its highly detailed rendering of text within images and a new feature called Magic Prompt that helps users write better prompts. Read More…

Apple has quietly released subtle AI updates to iOS. Given its recent AI research and public comments, a major AI update to Siri may be coming soon. Read More…

Klarna has published a blog post stating that its AI assistant is now doing the work of 700 full-time customer service agents. Read More…

Adobe's Project Music GenAI Control is an early-stage generative AI tool that creates music from text prompts and lets creators edit the result to fit their vision. Read More…

Pika Labs has introduced a new lip sync feature to its AI video generator, allowing users to give voices to AI characters and sync their lip movements with the desired speech. Read More…

OpenAI has partnered with the robotics startup Figure to develop specialized AI models for Figure's humanoid robots. Read More…

🌟 Product Innovation through AI

EMO AI by Alibaba: Transform Images to Lifelike Videos

Alibaba's Institute for Intelligent Computing unveils EMO, a cutting-edge system that transforms still portrait photos into lifelike videos.

EMO, short for Emote Portrait Alive, animates the person in a photo so they appear to speak or sing along to an audio track. It uses a direct audio-to-video synthesis approach, setting it apart from traditional methods that rely on intermediate 3D models or facial landmarks.

Audio-to-Video Synthesis: Uses a diffusion model to convert audio directly into video frames, capturing the nuances of speech and the matching facial expressions.

Training Data: Built on a vast dataset of diverse audio and video content, enabling EMO to emulate human expressions accurately.

Attention Mechanisms: Uses reference-attention to preserve the person's identity from the source photo and audio-attention to align facial movements with the audio input, making the expressed emotions more convincing.

Expressiveness and Realism: Trained on over 250 hours of diverse footage, EMO outperforms earlier methods in expressiveness and video quality, according to its researchers.

Ethical Considerations: Recognizes the potential for misuse, with ongoing efforts to ensure responsible innovation and the ethical application of this powerful technology.

Potential Applications of EMO

Alibaba's EMO, known for its advanced audio-to-video synthesis technology, offers a wide range of potential applications across various industries and settings.

Social Media: EMO could make creating personalized social media videos much easier, for example as a feature that turns a photo and an audio sample into a talking or singing video.

Education: EMO-generated videos could make learning materials more engaging and easier to tailor to individual learning needs. Used in interactive sessions, they might also help cultivate students' emotional intelligence and offer personalized support.

Customer Service: Paired with a conversational AI that responds to customer emotions, EMO could give virtual agents an expressive face, making support feel more tailored and empathetic and potentially raising customer satisfaction.

Entertainment: EMO can enhance entertainment by providing dynamic and interactive experiences in gaming, virtual reality, and immersive storytelling.

Marketing: In marketing and advertising, EMO can create more engaging and personalized content, potentially increasing campaign effectiveness and customer engagement.

EMO vs. Pika Labs Lip Sync

EMO, developed by Alibaba's Institute for Intelligent Computing, and Pika Labs' Lip Sync feature are both AI-powered tools designed to enhance video content by synchronizing spoken dialog with characters' mouth movements. However, there are some key differences between the two:

Training Data and Audio:

EMO is trained on a vast and diverse dataset of audio and video content, including speeches, film clips, and singing performances in multiple languages, allowing it to produce convincing speaking and singing videos in various styles.

Pika Labs' Lip Sync feature, on the other hand, uses voice technology from ElevenLabs to generate speech from text and also accepts uploaded audio tracks, letting users customize the voices of their AI-generated video characters.

Application:

EMO is a standalone research system that uses AI to make still images talk or sing.

Pika Labs' Lip Sync feature is designed to augment existing video content by synchronizing spoken dialog with characters' mouth movements.

Realism and Engagement:

According to its researchers, EMO is a significant advancement in audio-driven talking-head video generation, surpassing existing methods in expressiveness and realism.

Pika Labs' Lip Sync feature also enhances the realism and engagement of AI-generated videos by synchronizing spoken dialog with video characters' mouth movements.

🎨 Design Meets AI

LTX.studio: Revolutionizing Visual Storytelling for the Digital Age

LTX.studio, developed by Lightricks, is an AI-powered filmmaking platform designed to change how creators bring their artistic visions to life. Starting from a simple prompt, it can generate scripts, storyboards, and engaging short video clips in one seamless process.

Key Features:

AI-Powered Creativity: You can transform your script ideas into comprehensive video productions with advanced AI assistance. This includes creating characters, adjusting camera angles, and adding special effects to your videos.

Comprehensive Workflow: From ideas to final production, LTX.studio provides a full suite of tools. Customize scenes, characters, styles, and settings with ease, making it an ideal environment for filmmakers, pre-production teams, and advertising agencies.

Collaboration and Exporting: Enhance teamwork with features that allow for easy preview and export of projects. This facilitates external feedback and streamlines the collaborative process.

Advanced 3D Generative Technology: LTX.studio is built on a suite of 3D generative technologies and AI models. These tools give users extensive control over the visual and narrative aspects of their videos while automating the creation of character profiles, scenes, music, sound effects, and dialog.

🚀 Innovations in AI

Google DeepMind’s 'Genie': AI That Generates Video Games

Google DeepMind's new generative model, Genie, can create playable video games resembling classic 2D platformers such as Super Mario Bros.

Genie can generate games from short descriptions, hand-drawn sketches, or photos, running at one frame per second.

Unlike previous models, Genie was trained on 30,000 hours of video footage alone, with no labels for the actions being taken, and learned how the game character's position changes in response to eight possible actions. Because it needs no action labels, Genie could in principle learn from the vast amount of gameplay video already available online. The AI generates each frame of the game in real time as the player interacts with it.

How Does Genie Work?

Google DeepMind's Genie draws on a vast collection of about 200,000 hours of unlabelled video footage, mainly of 2D platformer games, which was filtered down to the 30,000 hours used for training. Unlike previous AI models, Genie learns by observation rather than from explicit action labels. It works in three stages:

Video Tokenizer: Breaks down complex video data into manageable "tokens".

Latent Action Model: Analyzes the transition between consecutive frames to infer the action that caused it, such as jumping or running, without being told which action was taken.

Dynamics Model: Predicts the next frame from the current frame and the chosen action, ensuring a seamless gaming experience.

Put together, Genie learns which actions move the game character simply by watching videos, then generates each new frame in response to the action the player chooses. Genie is not currently available to the public, but it suggests that a model can pick up basic physics and game mechanics from unlabelled video alone.
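To make the three stages more concrete, here is a toy Python sketch of how they could fit together. It is a heavily simplified illustration under stated assumptions, not DeepMind's implementation: frames are tiny binary pixel grids, the "tokenizer" only marks which 2x2 patches contain the character, the eight latent actions are hard-coded as the eight compass moves (in Genie they are learned and have no predefined meaning), and the "dynamics model" shifts the character one cell instead of running a neural network. All names (`tokenize`, `infer_latent_action`, `dynamics`, `play`, `ACTIONS`) are hypothetical.

```python
from itertools import product
from typing import List, Tuple

Grid = List[List[int]]  # toy binary image or token grid (1 = character, 0 = background)

# Assumption: eight latent actions, hard-coded here as the eight compass moves
# (row delta, column delta). In Genie the actions are learned codes with no
# predefined meaning; only the count of eight comes from the article.
ACTIONS: List[Tuple[int, int]] = [
    move for move in product((-1, 0, 1), repeat=2) if move != (0, 0)
]


def tokenize(frame: Grid, patch: int = 2) -> Grid:
    """Stage 1, toy video tokenizer: compress each patch x patch block of
    pixels into one discrete token (1 if the block contains the character)."""
    rows, cols = len(frame) // patch, len(frame[0]) // patch
    return [
        [
            int(any(frame[r * patch + i][c * patch + j]
                    for i in range(patch) for j in range(patch)))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def dynamics(tokens: Grid, action: int) -> Grid:
    """Stage 3, toy dynamics model: predict the next frame's tokens from the
    current tokens and a latent action by moving the character one cell,
    clamped to the edges of the grid."""
    dr, dc = ACTIONS[action]
    rows, cols = len(tokens), len(tokens[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if tokens[r][c]:
                nxt[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)] = 1
    return nxt


def infer_latent_action(before: Grid, after: Grid) -> int:
    """Stage 2, toy latent action model: return the action whose predicted
    next frame differs from the observed one in the fewest tokens."""
    def mismatch(action: int) -> int:
        predicted = dynamics(before, action)
        return sum(p != a for prow, arow in zip(predicted, after)
                   for p, a in zip(prow, arow))
    return min(range(len(ACTIONS)), key=mismatch)


def play(first_frame: Grid, player_actions: List[int]) -> List[Grid]:
    """Play loop: tokenize the starting image once, then generate one new
    token frame per action the player picks."""
    tokens = tokenize(first_frame)
    frames = [tokens]
    for action in player_actions:
        tokens = dynamics(tokens, action)
        frames.append(tokens)
    return frames


if __name__ == "__main__":
    def frame_with(pixels):
        return [[int((r, c) in pixels) for c in range(8)] for r in range(8)]

    start = frame_with({(4, 4), (4, 5), (5, 4), (5, 5)})  # 2x2-pixel character
    moved = frame_with({(4, 6), (4, 7), (5, 6), (5, 7)})  # same, 2 pixels right
    action = infer_latent_action(tokenize(start), tokenize(moved))
    print(action, ACTIONS[action])  # → 4 (0, 1)
    print(len(play(start, [action, action])))  # → 3
```

The split mirrors the stages described above: the latent action model is what lets a system discover actions from unlabelled video during training, while at play time the player supplies the action directly and only the tokenizer and dynamics model run. The real Genie also decodes tokens back into pixels, a step this sketch skips.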

⚙️ Tools to Supercharge Your Productivity

5 AI Tools That Would Make You Smarter

WatermarkRemover.io: Remove all kinds of text watermarks from your images using AI.

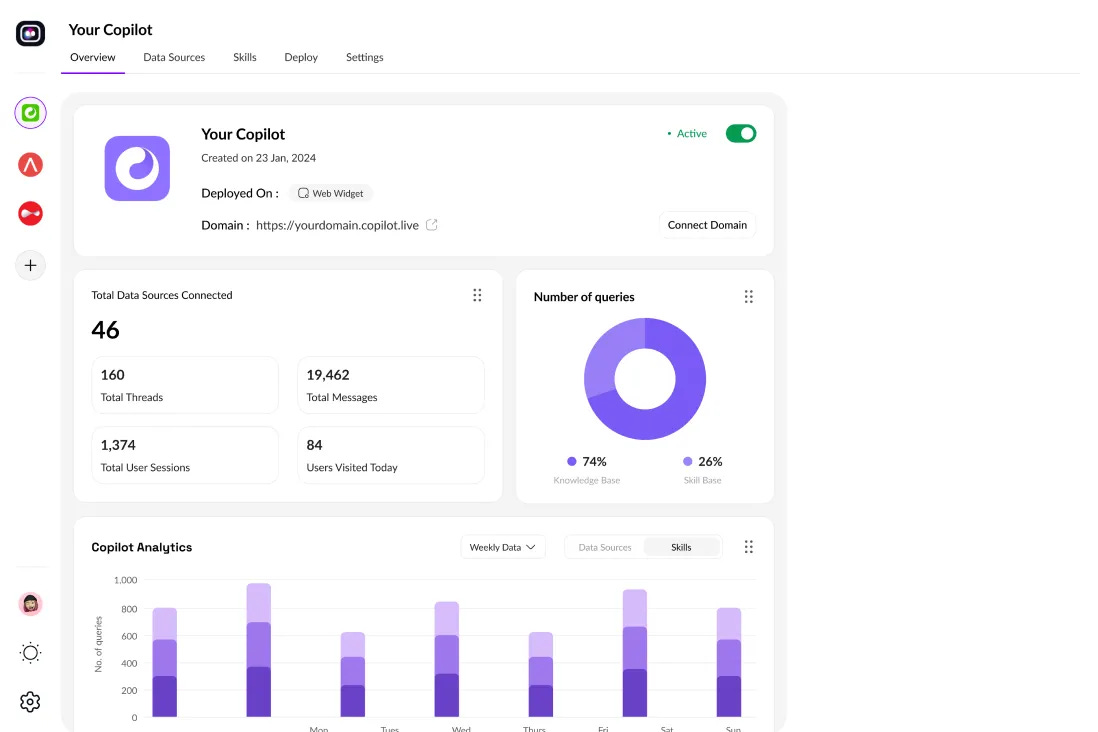

Copilot.live: Create a custom ChatGPT-style chatbot for your website with Copilot and let it engage with your users, handle customer support, and generate leads.

Articly AI: A fully automated AI-powered blog post writer. You can replace your content writer with this AI.

Rupert AI: Create and pre-test your assets in seconds with deep learning and cognitive science models built on millions of human reactions.

ThinkerNotes: Leverage the books you read to create original content ideas.