From Assistants to Agents: AI Starts Taking the Lead

From Mailmodo to Shopify to Meta, AI is taking on real roles: marketer, seller, creator. This week’s AI Fyndings explores what happens when it starts to do more than just assist.

Welcome to AI Fyndings!

With AI, every decision is a trade-off: speed or quality, scale or control, creativity or consistency. AI Fyndings discusses what those choices mean for business, product, and design.

In business, Mailmodo’s AI campaign agent takes on the repetitive side of email marketing.

In product, Shopify and OpenAI bring the storefront directly into chat.

In design, Meta’s Vibes gives AI video its own sense of emotion and mood.

AI in Business

Mailmodo’s AI Campaign Agent Joins the Marketing Team

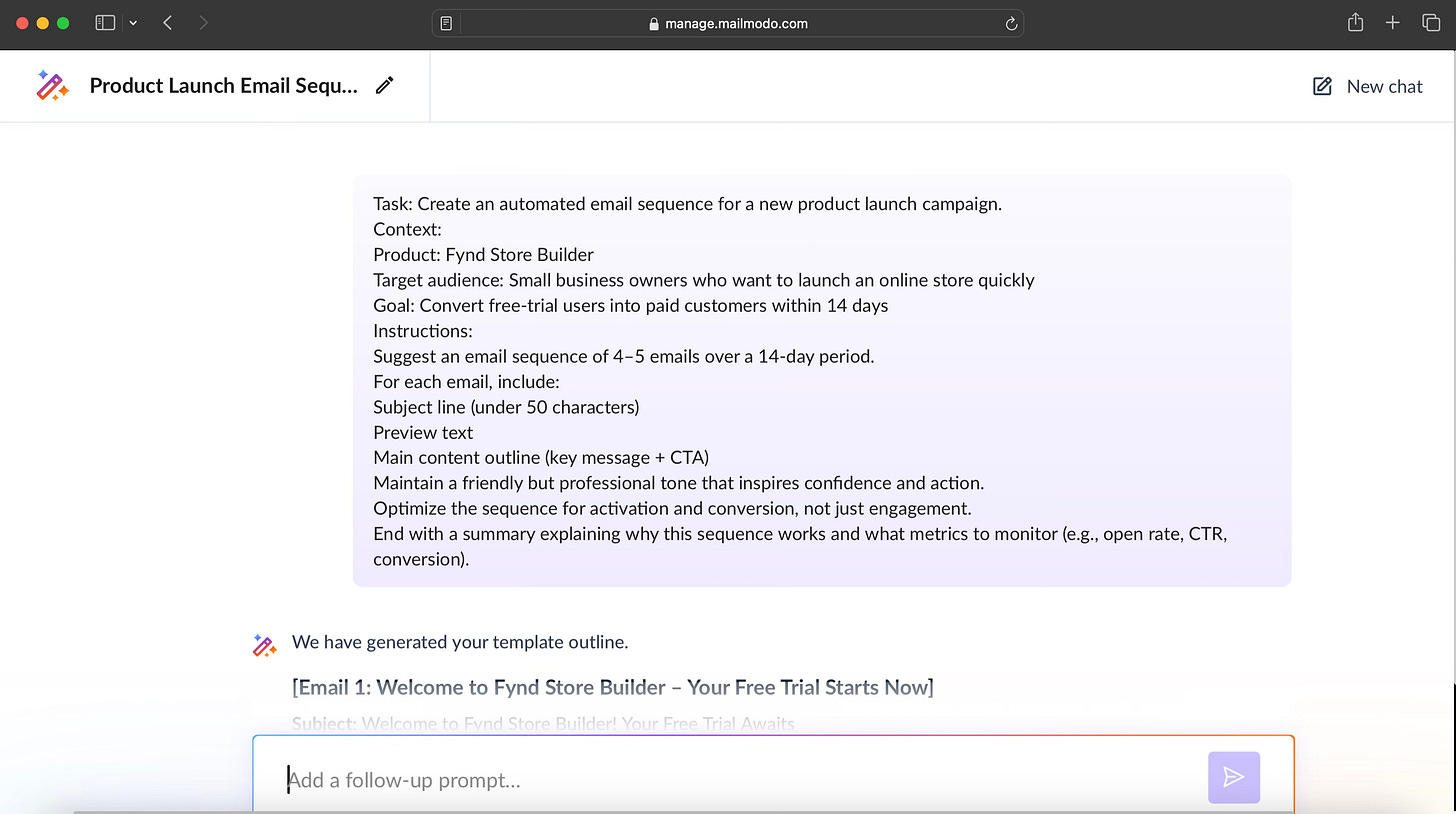

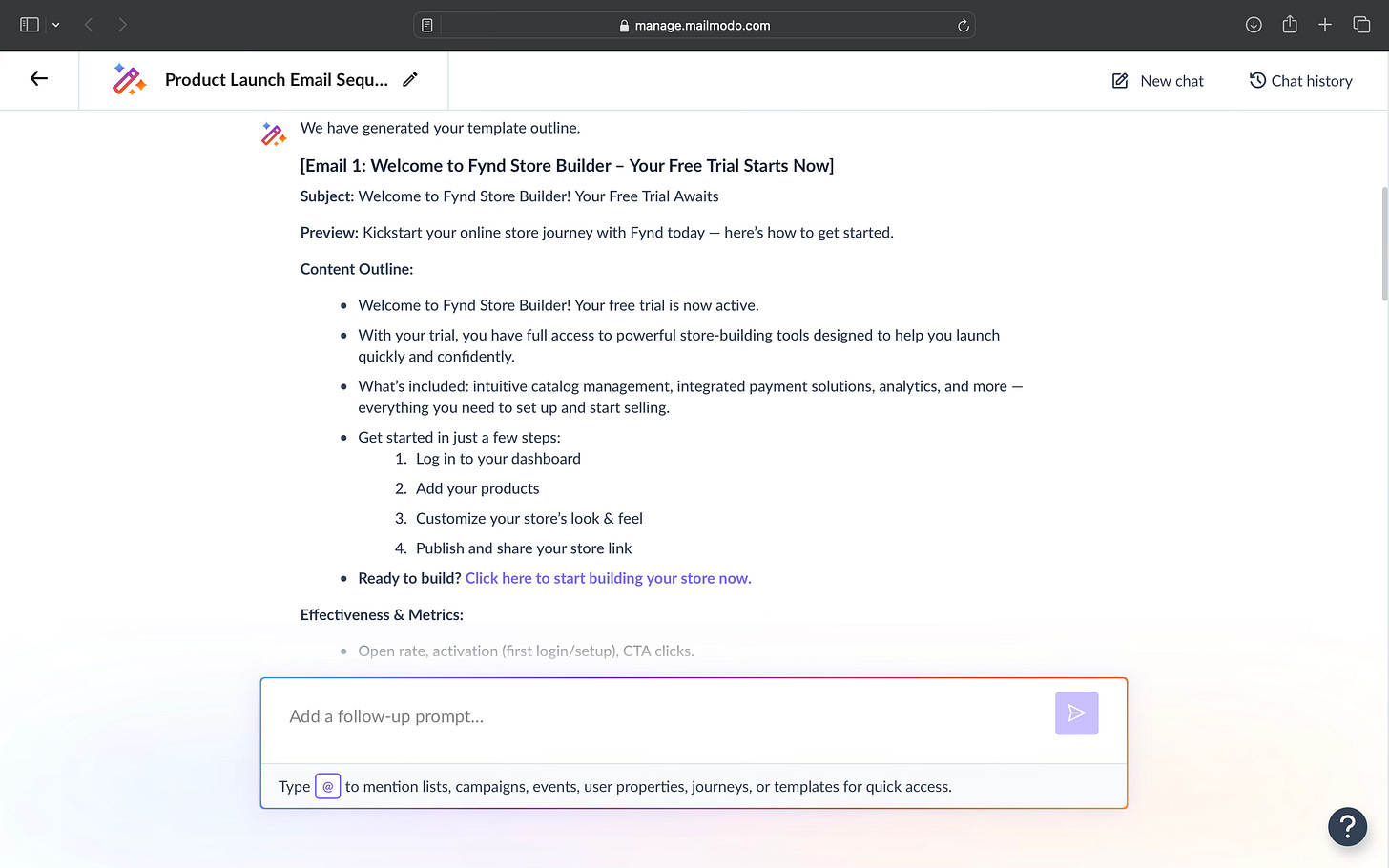

Email marketing has always been part strategy and part repetition. Mailmodo, best known for its interactive email marketing platform, has introduced a new AI campaign agent that claims to take over the repetitive side of the job, such as planning, writing, and optimizing email campaigns automatically. The idea is simple: marketers focus on strategy, while the AI handles execution. I decided to test how well that promise holds up in practice.

I gave it a simple prompt: “Create a 5-email launch sequence for Fynd Store Builder targeting small business owners on a 14-day trial.” Within seconds, it drafted a complete sequence, from welcome to feedback, with subject lines, preview text, CTAs, and performance metrics.

What’s interesting

Mailmodo’s AI understands the mechanics of email marketing better than most. The sequence it created followed a textbook product-led growth funnel: onboarding, education, success stories, urgency, and feedback. Every email had a purpose, and each one mapped neatly to a stage of the customer journey.

What stood out most was its ability to connect creative output to measurable goals like open rates, click-through rates, and conversions. It’s not just writing; it’s thinking about campaign outcomes. Compared to tools like Jasper or Copy.ai, which focus mostly on content generation, Mailmodo feels built for marketers who care about performance.

Still, the writing felt synthetic. It got the structure right but missed personality and emotional pull. It sounded like someone who knows the playbook, not the audience.

Where it works well

Mailmodo’s AI is ideal for marketers who need a fast, usable baseline. It can build a full draft sequence, write subject lines, and suggest tracking metrics in minutes. For small or busy teams, it’s a great way to get momentum and avoid the blank-page problem.

It’s also helpful for teams experimenting with AI-assisted marketing workflows. The structure it creates can serve as a reliable base for further creative editing and testing.

Where it falls short

The agent doesn’t yet understand why a story connects. It misses brand personality, emotional pacing, and the small touches that make an email sound human. You’ll still need to refine phrasing, adjust flow, and add empathy.

When compared to adaptive tools like Instantly.ai or Seventh Sense, which personalize tone and timing based on audience behavior, Mailmodo’s output feels more functional than expressive. It’s efficient but not yet intuitive.

What makes it different

I feel the real strength of Mailmodo is how it integrates AI across the entire email workflow. Most platforms separate creation, automation, and analysis, but this one ties them together. You can plan, draft, and optimize all within a single system. It does not reinvent the wheel, but it’s a thoughtful step toward simplifying marketing automation.

My take

Mailmodo’s AI campaign agent is not here to replace strategy but to remove friction. It handles structure, flow, and timing almost perfectly but leaves meaning and voice to you.

It’s not perfect, but it’s practical. And sometimes, a tool that quietly makes the process smoother is far more valuable than one that tries to reinvent it.

AI in Product

Shopify and OpenAI Make Chat the New Storefront

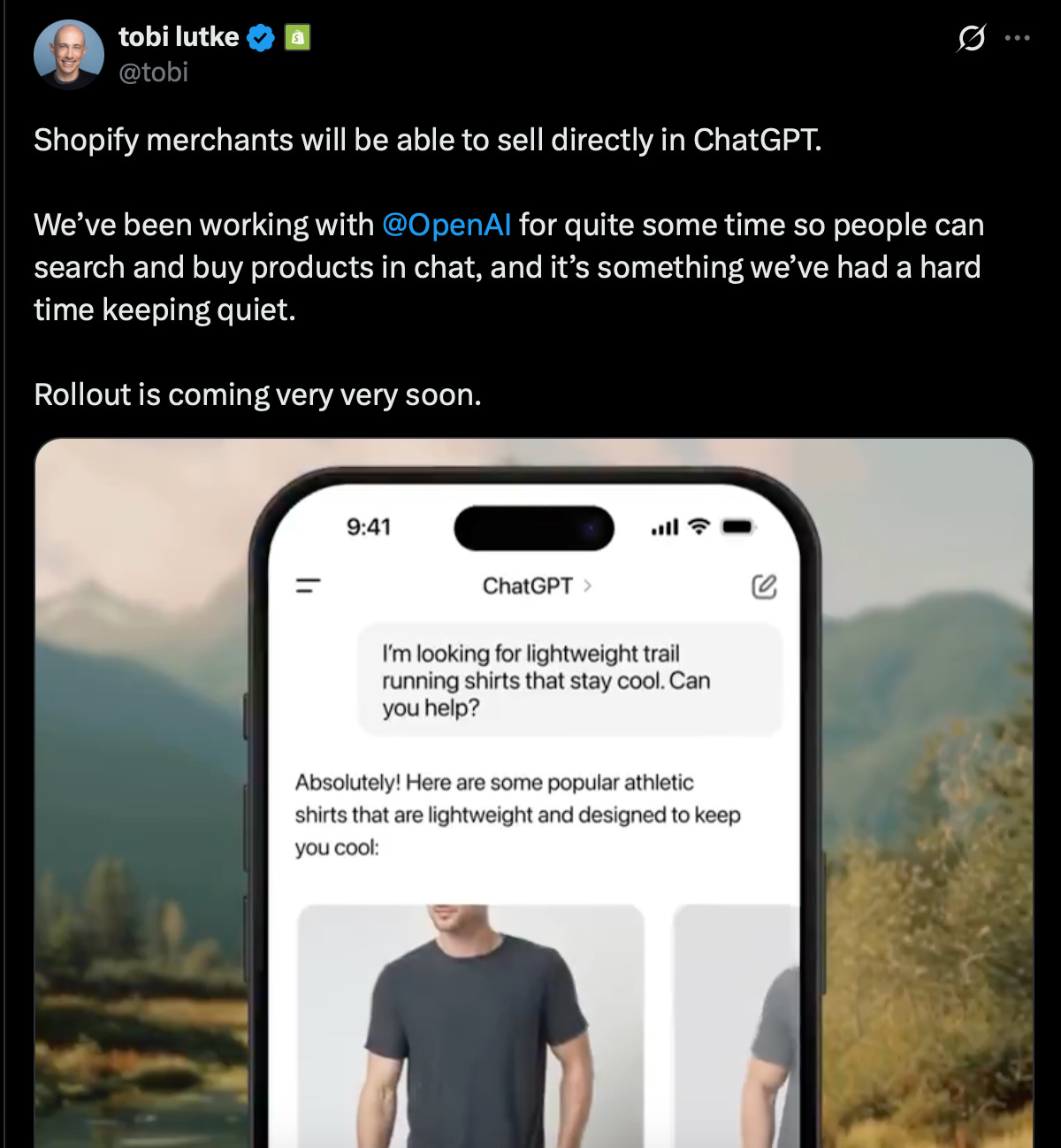

Shopify has partnered with OpenAI to let merchants sell directly inside ChatGPT, creating what might be the first real version of conversational commerce at scale. The idea is that when a user asks ChatGPT for product recommendations, it can now show live product listings from Shopify merchants, complete with price, images, and checkout options, without opening a new tab or visiting a website.

I haven’t run a full prompt test here (yet), but based on what Shopify and OpenAI are claiming, this feels like a turning point for conversational commerce. It’s a simple idea with massive implications: shopping that takes place entirely through conversation.

What’s interesting

The biggest payoff is convenience. Every redirect, every new tab, every login risks losing a customer. Collapsing those steps into a chat feels next-level. Merchants still control their branding and orders, and transactions continue to flow through the Shopify dashboard.

For now, the rollout focuses on single-item Instant Checkout, with support for multi-item carts coming later. This suggests the companies want to test and refine the UX before expanding.

The most interesting part is how this shifts the role of AI from assistant to active seller. The chat doesn’t just answer questions; it becomes the point of sale. For smaller merchants, this could also open up discovery opportunities that traditional search or ads often overlook, leveling the playing field in a context-driven marketplace. On platforms where visibility is tied to ad budgets and SEO rankings, this kind of conversational discovery could finally give independent sellers a fairer chance to be found. How much of that potential is actually realized is something we’ll have to see as adoption grows.

Where it works well

Perfect for direct or one-off purchases where users want quick results

Makes sense for practical queries like “good wireless chargers under ₹2,000”

Could help small brands surface alongside bigger ones by competing on context, not ad spend

Where it falls short

I feel this won’t work for everyone. The chat interface still limits product discovery and storytelling. It’s harder to showcase design, reviews, or brand identity in a text-first flow.

For many shoppers, the joy lies in wandering through options, comparing styles, and finding something they didn’t know they wanted. That feeling gets lost when everything becomes a direct, one-step transaction. It’s quick and convenient, but it also makes shopping feel a little too straightforward. It becomes more about getting it done than actually enjoying it.

It could also raise questions around data sharing and visibility. Merchants may be unclear about how much control they actually retain once the sale happens in an AI environment rather than on their own site.

And compared to other AI-based commerce moves, such as Amazon’s voice shopping (Alexa) or Shopify’s earlier AI tools, this will need time to earn trust. Users may still prefer browsing before buying.

What makes it different

Shopify’s approach stands out because it treats conversational AI as an active sales channel. While Amazon’s Alexa and Google’s shopping experiments were mostly voice-based, this feels more tangible, especially for digital-first businesses already using Shopify.

It keeps merchants in charge of their data, payments, and fulfillment while letting AI handle discovery and interaction. That balance could make it more sustainable than other short-lived AI commerce experiments.

My take

If this works well, it could change the default path of online shopping, from “search, browse, click” to “ask, get, buy.” But it won’t replace your website or brand experience anytime soon.

Shopify’s move isn’t about replacing storefronts. It’s about meeting customers where they already are: inside conversations.

AI in Design

AI Video Gets Its Mood Board: Inside Meta’s Vibes

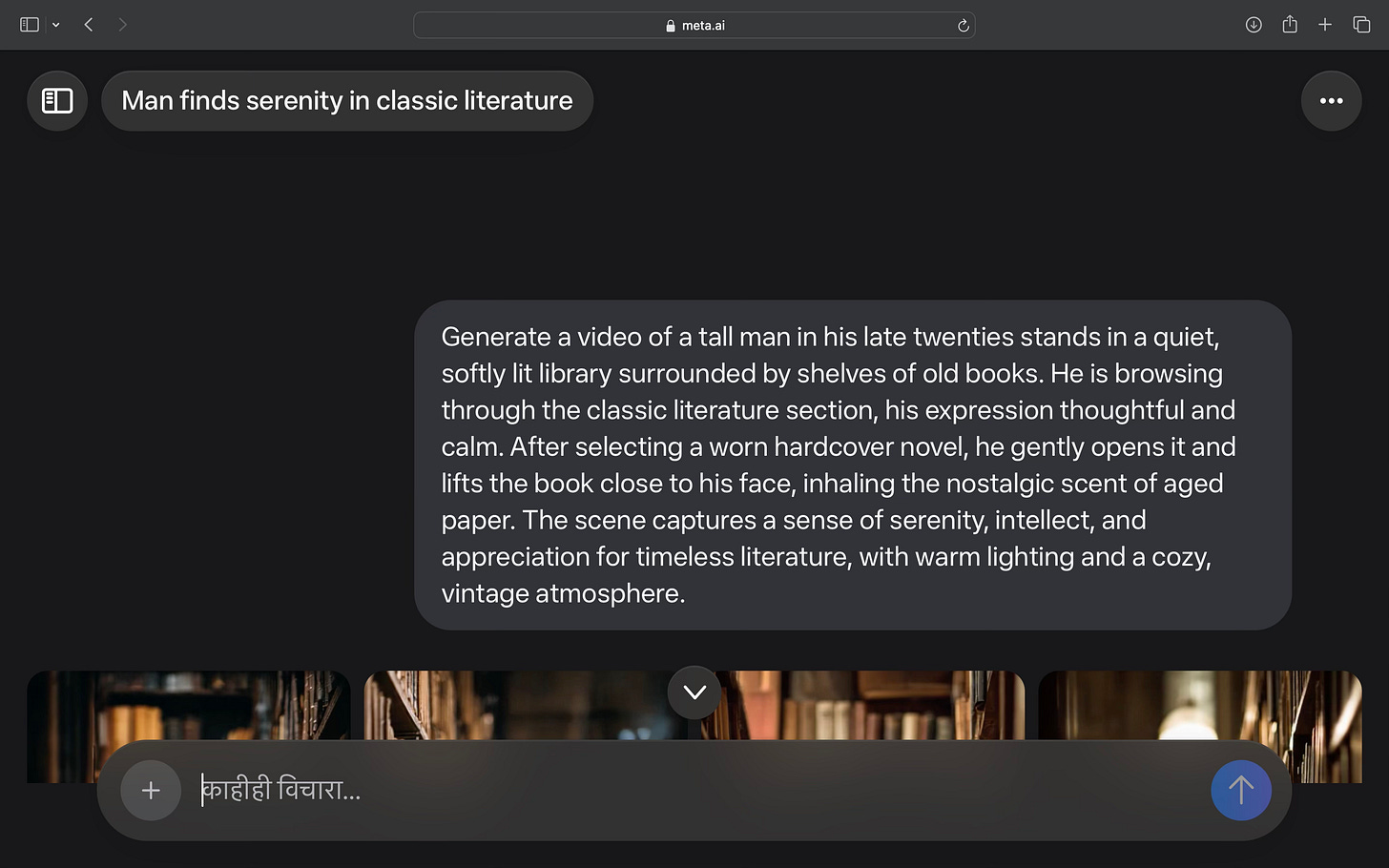

Meta has launched Vibes, an AI video tool that turns simple text prompts into short, cinematic clips. It is designed to help anyone create visually rich, emotionally aware videos without technical skill. What makes it stand out is how it blends creation and discovery in one place. You can browse trending videos, remix existing ones, add music, and even save your own clips to a personal feed.

I tried it with a scene prompt: “A tall man in his late twenties stands in a softly lit library surrounded by old books.” The goal was to test whether Vibes could capture the atmosphere rather than just generate motion.

What’s interesting

The output was better than I expected. The lighting felt warm, the pacing was calm, and the overall tone was exactly what I had in mind. It captured the quiet focus of the moment rather than just showing random movement. The transitions between frames were smooth, and the camera angles had intention.

The real surprise, though, came after generation. Vibes lets you add music from trending soundtracks, through search, or from a list of saved tracks, and that choice completely changes how the video feels. A mellow piano track turned the scene poetic, while an ambient instrumental made it feel cinematic.

The ability to experiment instantly with sound, style, and mood is what gives Vibes depth. It’s not just generating video but composing an experience.

Where it works well

Vibes performs best when the prompt centers on mood or setting rather than complex actions. It excels in scenes that rely on light, texture, and pace. The interface is simple and intuitive: you can switch between styles, try different soundtracks, or remix your own video in seconds.

It is particularly useful for designers and creative teams who want to visualize storyboards, brand moods, or ad concepts quickly. The remixing feature also opens room for iteration. You can take a generated clip, tweak the style or music, and instantly see a new version without redoing the whole process.

Where it falls short

Its realism still has limits. Human motion can look slightly mechanical, and facial details are inconsistent. If you pause a frame, you notice imperfections. Long or complex scenes sometimes lose continuity.

There is also limited control beyond text prompts. You cannot yet fine-tune camera angles, expressions, or lighting direction. Compared to tools like Runway Gen-3 or Veo 3, Vibes feels more creative than technical. It focuses on expression, not precision.

The social feed format is both a strength and a weakness. It encourages remixing and discovery but can make the output feel repetitive or formulaic after a while.

What makes it different

While most AI video platforms aim for realism, Meta seems to be chasing emotion and accessibility. Vibes isn’t built for filmmakers; it’s built for creators who want to communicate a feeling. The built-in music library, trending feed, and quick remix tools make it less of a production platform and more of a creative playground.

My take

Vibes may not replace professional tools yet, but it does something few others manage: it makes AI videos feel personal. It understands tone and ambiance better than most of its competitors. For mood boards, design storytelling, and early concept development, it is genuinely useful. It still needs more control and refinement, but the intent is right.

In the Spotlight

Recommended listen: ChatGPT–Shopify Integration & the Future of Chat-Native Commerce, a sharp take on how embedded checkout in ChatGPT will shift ecommerce to ask → compare → confirm → pay, and why brands must make their pages machine-readable and avatar-specific.

“You need to position yourself and your company in a way where you are using the Google search of ChatGPT.”

- Sasha Karabut • ~9:20

This Week in AI

A quick roundup of stories shaping how AI and AI agents are evolving across industries:

OpenAI partners with Broadcom to develop custom AI chips, reducing dependence on external hardware suppliers.

Salesforce expands Agentforce 360 with deeper OpenAI and Anthropic integrations, enabling smarter, autonomous enterprise agents.

Google debuts Gemini 2.5 “Computer Use” model, giving AI agents the ability to navigate and interact directly with web interfaces.

AI Out of Office

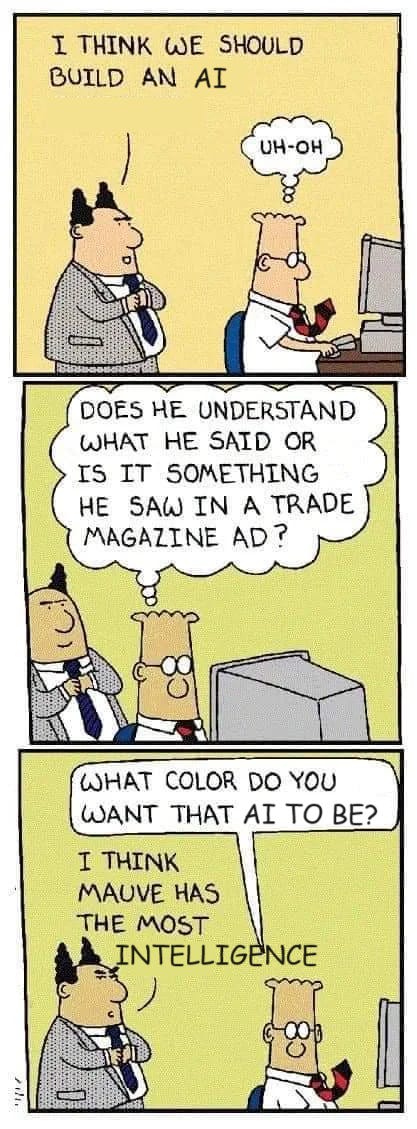

Source: Reddit

AI Fynds

A curated mix of AI tools that make work more efficient and creativity more accessible.

Hailuo → A new conversational writing assistant that blends research, outlining, and drafting in one flow, helping teams co-create long-form content faster.

Synthesis → A collaboration platform for AI-first teams that connects agents, data, and workflows into unified operational pipelines.

AI Text-to-Video Generator → Instantly convert written prompts into cinematic videos with realistic motion and lighting, built for quick creative prototyping.

Closing Notes

That’s it for this edition of AI Fyndings. From campaign assistants to conversational storefronts and creative video tools, AI continues to move quietly into the spaces where we work and create.

Thanks for reading! See you next week with more shifts, tools, and ideas from the world of AI.

With love,

Elena Gracia

AI Marketer @ Fynd